Hello there! I’m Guodong Du, currently pursuing my PhD at The Hong Kong Polytechnic University (PolyU). Before that, I was a research assistant at the Knowledge and Language Computing Lab @ Harbin Institute of Technology (Shenzhen). Prior to joining HITSZ, I was a research intern and master's student in the Learning and Vision Lab @ National University of Singapore, advised by Professors Xinchao Wang and Jiashi Feng. I also worked as an intern on low-level computer vision at Huawei Noah’s Ark Lab, Shenzhen, China, mentored by Xueyi Zou.

My research interests include knowledge transfer, fusion, and compression; multimodal learning; heuristic algorithms; spiking neural networks; and low-level computer vision.

🔥 News

Good News Bad News.

- 2025.08: 🎉 One paper on which I am co-corresponding author is accepted by EMNLP 2025 main. Thanks to all my collaborators!!

- 2025.05: 🎉 One first-author paper and one co-first-author paper are accepted by ACL 2025 main. Thanks to all my collaborators!!

- 2024.09: 🎉 One first-author paper is accepted by NeurIPS 2024. Thanks to all my collaborators!!

- 2024.05: 🎉 One first-author paper is accepted by ACL 2024 Findings. Thanks to all my collaborators!!

📝 Recent Projects

- Knowledge Fusion: A Comprehensive Survey. GitHub Repo

📝 Publications

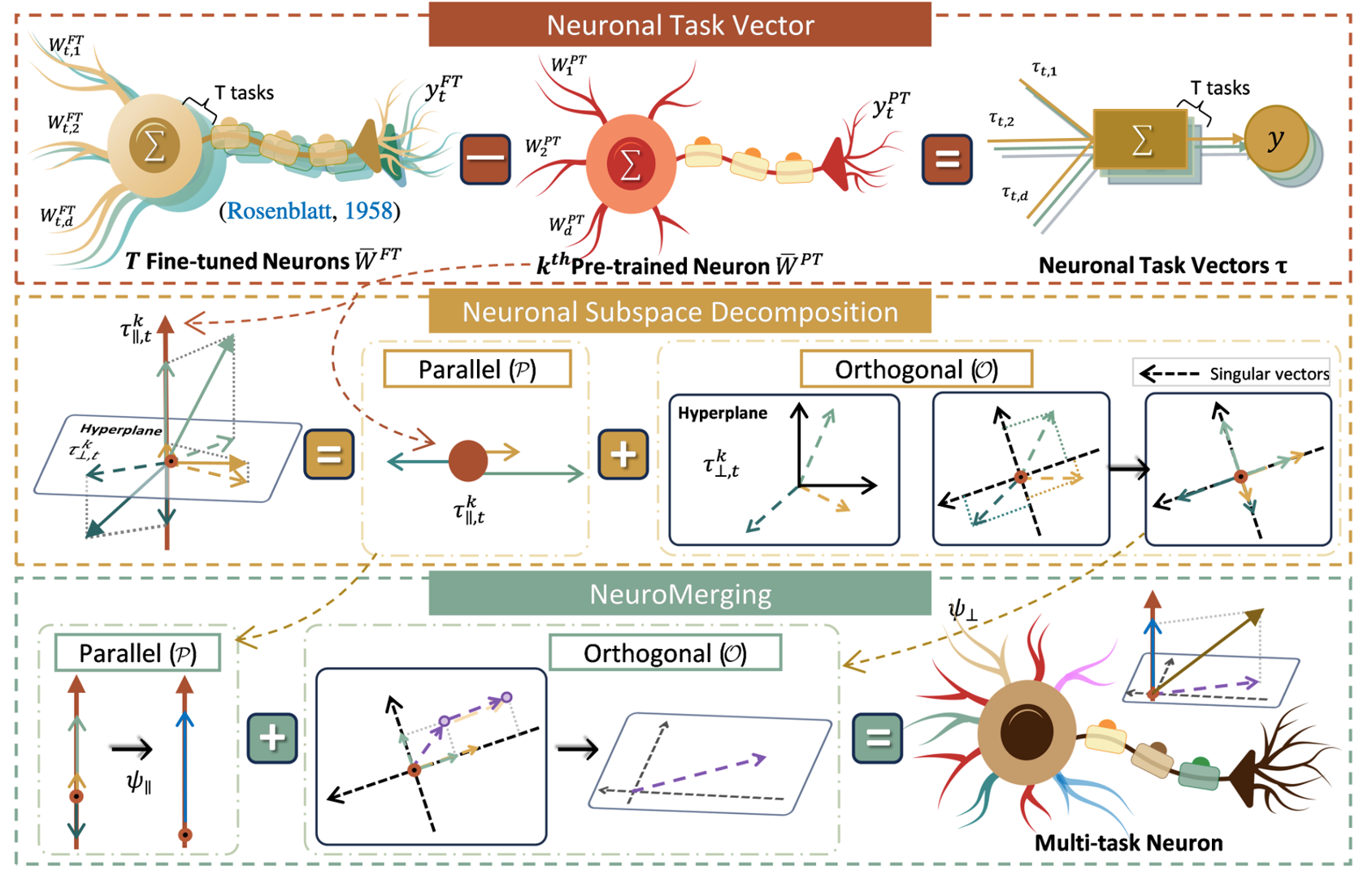

Zitao Fang, Guodong Du*, Jing Li, Ho-Kin Tang, Sim Kuan Goh

- We introduce NeuroMerging, a novel merging framework that mitigates task interference within neuronal subspaces, enabling training-free model fusion across diverse tasks.

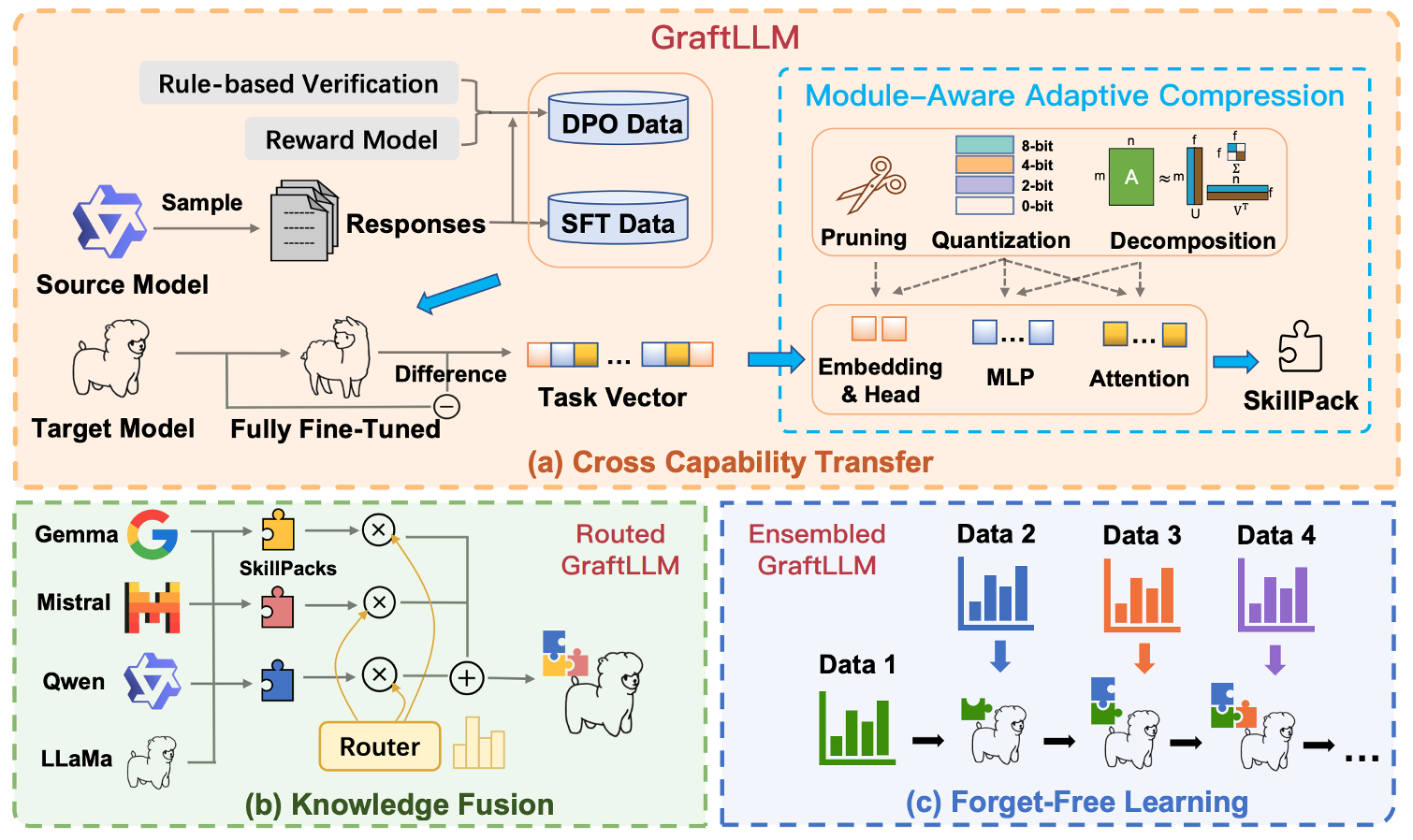

Knowledge Grafting of Large Language Models

Guodong Du, Xuanning Zhou, Junlin Lee, Zhuo Li, Wanyu Lin, Jing Li

- We propose a knowledge grafting approach that efficiently transfers capabilities from heterogeneous LLMs to a target LLM through modular SkillPacks.

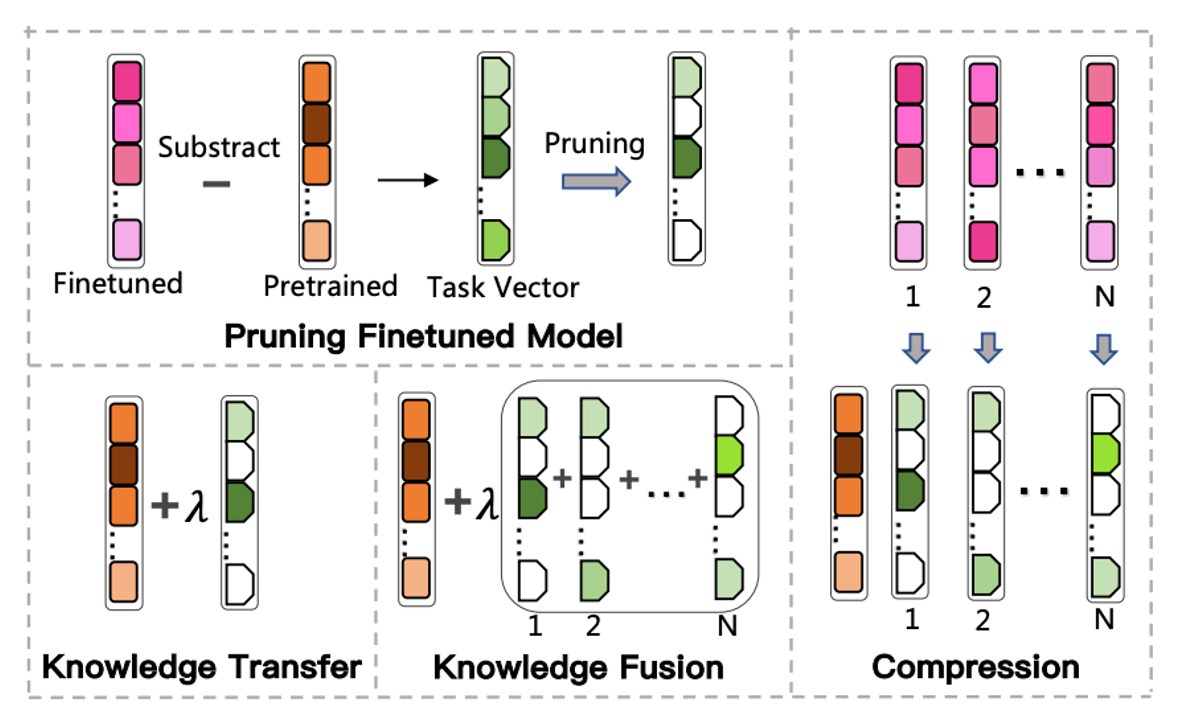

Neural Parameter Search for Slimmer Fine-Tuned Models and Better Transfer

Guodong Du, Zitao Fang, Junlin Lee, Runhua Jiang, Jing Li

- We propose Neural Parameter Search to enhance the efficiency of pruning fine-tuned models for better knowledge transfer, fusion, and compression of LLMs.

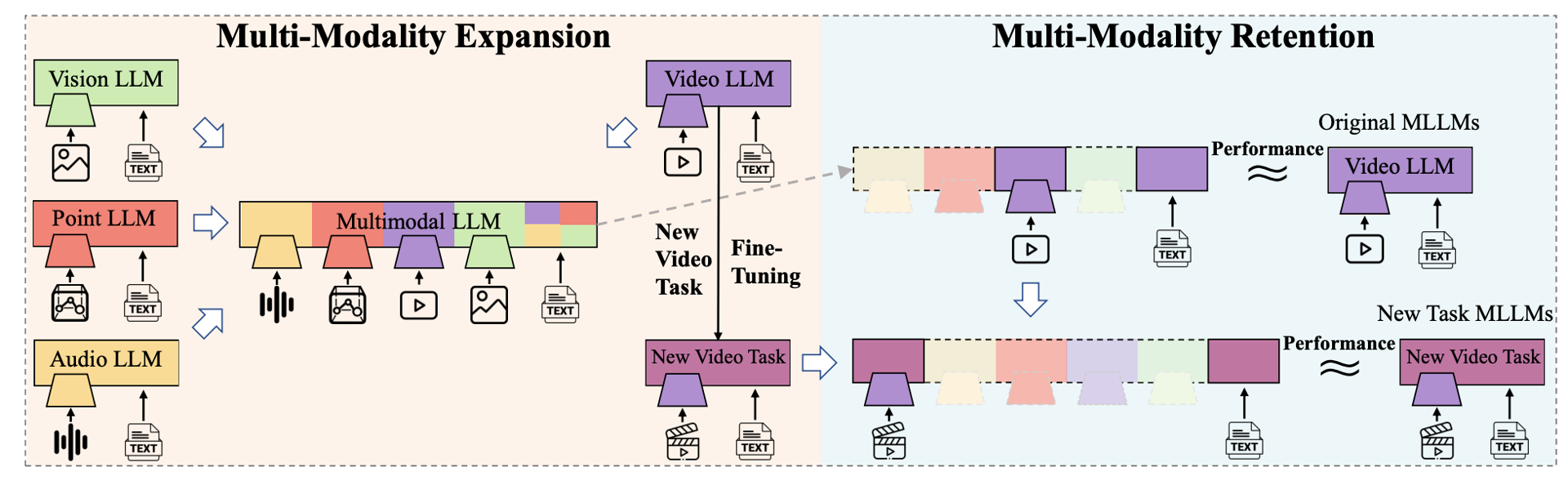

Multi-Modality Expansion and Retention for LLMs through Parameter Merging and Decoupling

Junlin Lee, Guodong Du*, Wenya Wang, Jing Li

- We propose MMER (Multi-modality Expansion and Retention), a training-free approach that integrates existing MLLMs for effective multimodal expansion while retaining their original performance.

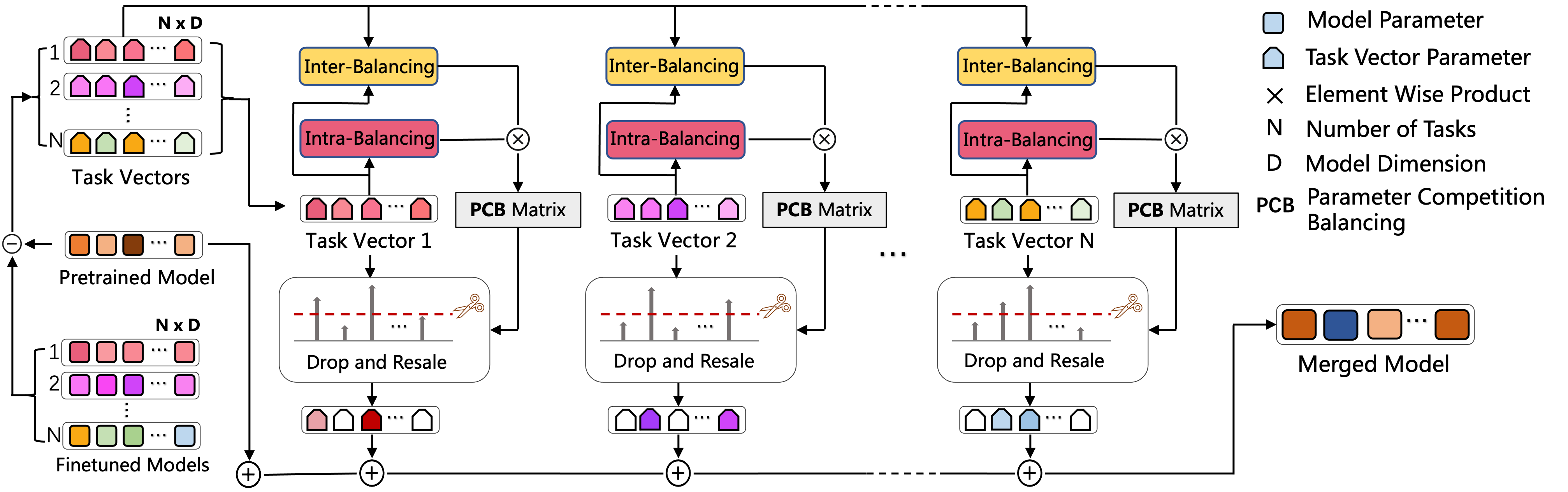

Parameter Competition Balancing for Model Merging

Guodong Du, Junlin Lee, Jing Li, Hanting Liu, Runhua Jiang, Shuyang Yu, Yifei Guo, Sim Kuan Goh, Ho-Kin Tang, Min Zhang

- We re-examine existing model merging methods, emphasizing the critical importance of parameter competition awareness, and introduce PCB-Merging, which effectively adjusts parameter coefficients.

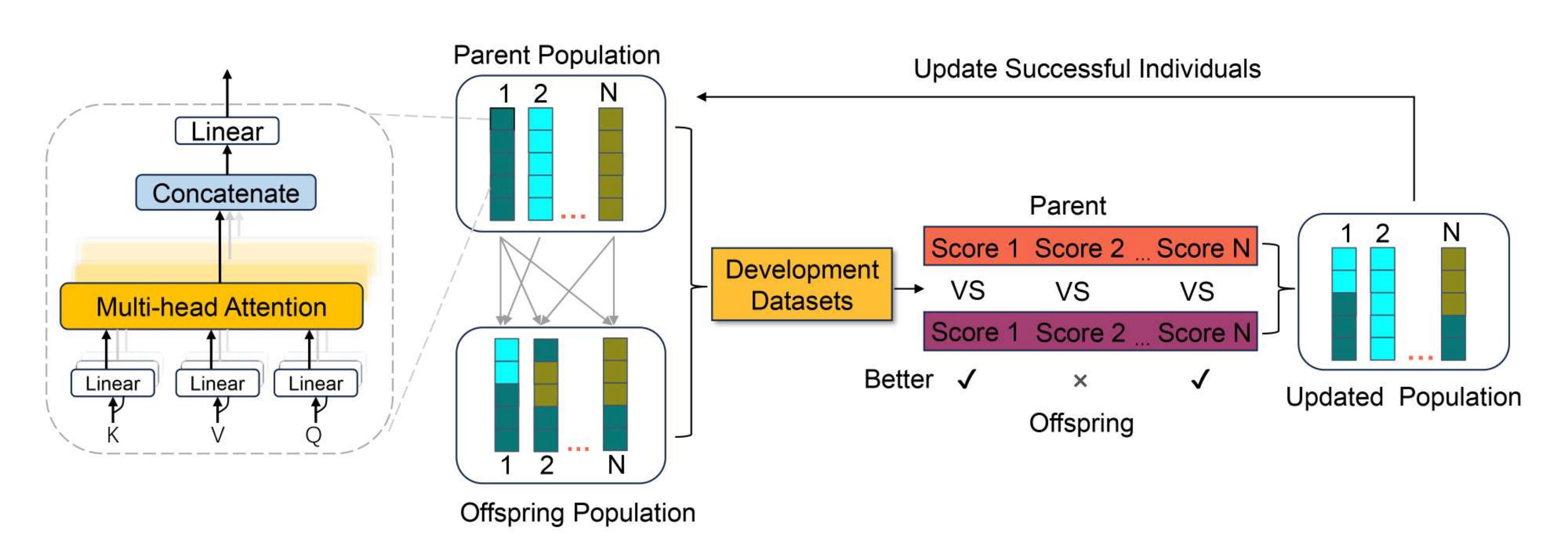

Knowledge Fusion By Evolving Weights of Language Models

Guodong Du, Jing Li, Hanting Liu, Runhua Jiang, Shuyang Yu, Yifei Guo, Sim Kuan Goh, Ho-Kin Tang

- Model Evolution is the first approach to evolve neural parameters using differential evolution algorithms. We introduce a novel knowledge fusion method that evolves the weights of (large) language models.

- IEEE SMC 2024: Impacts of Darwinian Evolution on Pre-trained Deep Neural Networks, Guodong Du, et al.

- IJCNN 2024: CADE: Cosine Annealing Differential Evolution for Spiking Neural Network, Runhua Jiang*, Guodong Du*, et al.

- IEEE SMC 2024: Evolutionary Neural Architecture Search for 3D Point Cloud Analysis, Yisheng Yang, Guodong Du, et al.

- IEEE Cyber 2024: MOESR: Multi-Objective Evolutionary Algorithm for Image Super-Resolution, Guodong Du, et al.

- CVPR 2021 Workshop: NTIRE 2021 Challenge on Video Super-Resolution, 3rd Place Award.

- arXiv 2020: End-to-end Rain Streak Removal with RAW Images, Guodong Du, et al.

- TIST 2020: Learning Generalizable and Identity-Discriminative Representations for Face Anti-Spoofing, Xiaoguang Tu, Guodong Du, et al. (ACM Transactions on Intelligent Systems and Technology)

(*: Co-first Author)

🎖 Honors and Awards

- 2021.10 3rd Place Award in NTIRE 2021 Challenge on Video Super-Resolution, Track I (New Trends in Image Restoration and Enhancement Workshop, CVPR 2021)

- 2017.09 National Encouragement Scholarship

- 2016.05 Honorable Award in MCM (The Mathematical Contest in Modeling)

- 2016.05 National Encouragement Scholarship

- 2015.05 National Scholarship

📖 Education

- 2019.09 - 2021.05, Part-time PhD student, National University of Singapore (NUS)

- 2018.08 - 2019.05, M.S., National University of Singapore (NUS)

- 2016.07 - 2016.12, Visiting Student, City University of Hong Kong (CityU) (Non-degree Undergraduate Exchange)

- 2014.08 - 2018.06, B.S., University of Electronic Science and Technology of China (UESTC)

💻 Internships

- 2024.03 - 2024.09, Knowledge and Language Computing Lab, Shenzhen, China.

- 2023.03 - 2024.09, Harbin Institute of Technology (Shenzhen), China.

- 2019.09 - 2021.09, Learning and Vision Lab, Singapore.

- 2020.08 - 2021.04, Huawei Noah’s Ark Lab, Shenzhen, China.

- 2019.01 - 2019.09, Biomind, Singapore.

- 2018.01 - 2018.04, YoueData, Chengdu, China.